Project 1: Threads

Threads

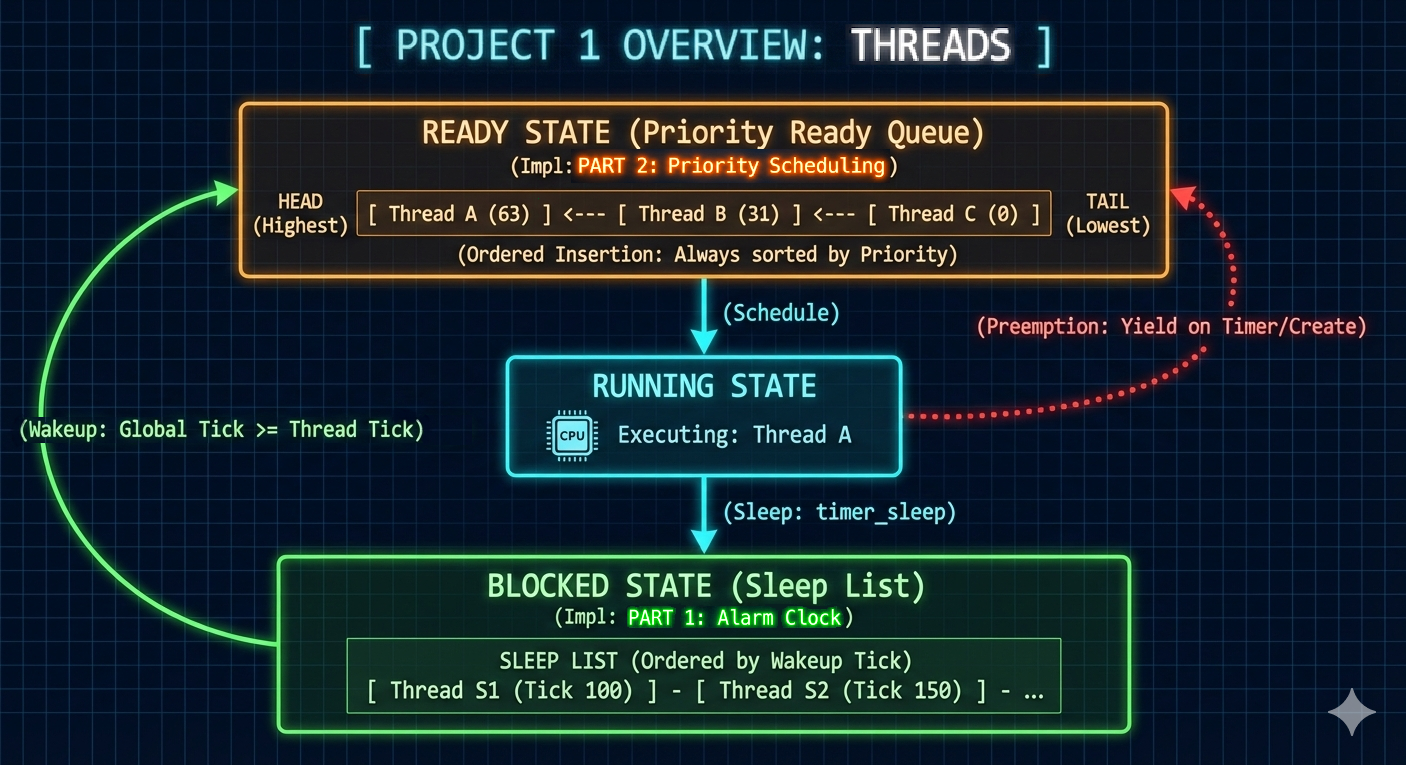

- The first project involved two significant advancements to the PintOS:

- Optimization of the alarm clock mechanism.

- Implementation of a priority-based scheduling algorithm incorporating preemption and priority donation.

-

Below, I outlined the objectives, challenges, solutions, and implementations for each component.

Part 1: Alarm Clock

Objective

- The primary objective of this enhancement is to optimize the

timer_sleep()function within PintOS by transitioning from an inefficient busy-waiting paradigm to a more efficient sleep/wakeup mechanism. - This optimization involves two key components:

- Utilizing the

THREAD_BLOCKEDstate:- Instead of actively consuming CPU cycles, threads will enter a blocked state when sleeping, allowing the CPU to be allocated to other tasks.

- Introducing a

sleep_list:- A dedicated list will be used to efficiently manage threads that are awaiting resumption, enabling quick identification and activation when their wake-up time arrives.

- Utilizing the

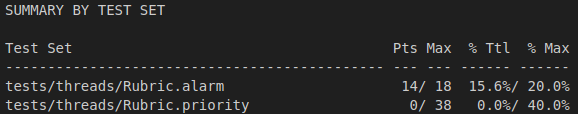

Current Problem

-

The original

timer_sleep()system call pauses a process for a specified number ofticksusing busy waitingClick to see the original code

timer_sleep (int64_t ticks) { int64_t start = timer_ticks (); ASSERT (intr_get_level () == INTR_ON); while (timer_elapsed (start) < ticks) thread_yield (); } -

This approach exhibits several inefficiencies:

- It repeatedly checks the current time in a tight loop.

- If the wake-up time has not yet arrived, it invokes

thread_yield(), which transitions the thread to theTHREAD_READYstate. - Crucially, if no other threads are present in the

ready_list, the same thread is immediately rescheduled asTHREAD_RUNNING.- This creates a continuous cycle of yielding and re-running, leading to a significant waste of CPU resources.

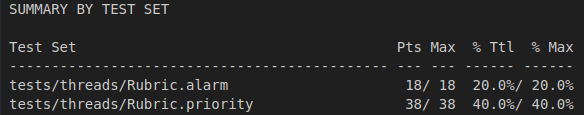

Original Grade

Solution

- To address the aforementioned problems,

timer_sleep()has been reimplemented to:- Leverage the

THREAD_BLOCKEDstate to suspend threads, preventing unnecessary CPU consumption. - Introduce a

sleep_listdata structure to effectively track and manage all sleeping threads.

- Leverage the

- The revised workflow for a

timer_sleep()call is as follows:- The calling thread is added to the

sleep_listvia a newthread_sleep()function. - The system periodically checks the current time (e.g., within the timer interrupt handler.)

- When a thread’s designated wake-up time is reached, it is moved from the

sleep_listto theready_listvia athread_wakeup()function, making it eligible for scheduling.

- The calling thread is added to the

Implementation Details

- The following components have been modified or introduced:

timer.ctimer_sleep()- The

thread_yield()call has been replaced withthread_sleep()when the process needs to sleep.Click to see the refined code

void timer_sleep(int64_t ticks) { int64_t start = timer_ticks(); ASSERT (intr_get_level () == INTR_ON); while(timer_elapsed(start) < ticks) thread_sleep(ticks); }

- The

timer_interrupt()- A call to

thread_wakeup()with the currenttimer_ticks()value has been added.Click to see the refined code

static void timer_interrupt (struct intr_frame *args UNUSED) { ticks++; thread_tick (); thread_wakeup(ticks); // wake up! }

- A call to

thread.hstruct thread- A new member,

int64_t tick_wakeup, has been added to store the specific tick at which the thread should be woken up.Click to see the refined code

struct thread { // other codes struct list_elem elem; /* List element. */ int64_t tick_wakeup; /* Tick till wake up */ // other codes }

- A new member,

thread.csleep_list- A new static list,

static struct list sleep_list, has been introduced to manage threads currently in theTHREAD_BLOCKEDstate due totimer_sleep().Click to see the refined code

/* List of all processes. Processes are added to this list when they are first scheduled and removed when they exit. */ static struct list all_list; /* List of processes in THREAD_BLOCKED state. Processes are added to this list when they are called with thread_sleep() and removed when wakeup() is called */ static struct list sleep_list;

- A new static list,

thread_init()- The

sleep_listis now initialized during thethread_init()function.Click to see the refined code

void thread_init (void) { ASSERT (intr_get_level () == INTR_OFF); lock_init (&tid_lock); list_init (&ready_list); list_init (&all_list); list_init (&sleep_list); /* Set up a thread structure for the running thread. */ initial_thread = running_thread (); init_thread (initial_thread, "main", PRI_DEFAULT); initial_thread->status = THREAD_RUNNING; initial_thread->tid = allocate_tid (); }

- The

thread_wakeup()- This function iterates through the

sleep_listand moves threads whosetick_wakeuptime has been reached to theready_list.Click to see the refined code

/* Wakes up sleeping threads by checking the sleep_list and comparing its elements to the global ticks, then activates the appropriate threads. */ void thread_wakeup(int64_t current_ticks) { enum intr_level old_level = intr_disable(); struct list_elem *current_element = list_begin(&sleep_list), *next_element = NULL; while (current_element != list_end(&sleep_list)) { struct thread *thread_to_check = list_entry(current_element, struct thread, elem); next_element = list_next(current_element); if (thread_to_check->tick_wakeup <= current_ticks) { list_remove(current_element); thread_unblock(thread_to_check); // put this thread to ready_list } current_element = next_element; } intr_set_level(old_level); }

- This function iterates through the

thread_sleep()- This function sets the calling thread’s state to

THREAD_BLOCKED, records its wake-up time (tick_wakeup), and adds it to thesleep_list. - It then calls

thread_block()to yield CPU control.Click to see the refined code

/* Sleeps for ticks. If the current thread is not the idle thread, this changes the status of the calling thread to THREAD_BLOCKED, stores the local tick for waking up, updates the global tick if necessary, and calls schedule(). */ void thread_sleep(int64_t ticks_to_sleep) { struct thread *cur = thread_current(); ASSERT (!intr_context()); enum intr_level old_level = intr_disable(); int current_ticks = timer_ticks(); if (cur != idle_thread) { cur->tick_wakeup = current_ticks + ticks_to_sleep; list_push_back(&sleep_list, &cur->elem); } thread_block(); intr_set_level (old_level); }

- This function sets the calling thread’s state to

Conclusion

- This modification successfully eliminates the inefficient busy-waiting mechanism from

timer_sleep(), leading to a significant improvement in CPU efficiency within PintOS by allowing the CPU to perform other tasks while threads are sleeping.

Part 2: Priority

Objective

- The primary objective of this section is to fundamentally transform PintOS’s scheduling mechanism.

- This involves replacing the existing FIFO scheduling with a robust priority-based system, and incorporating preemption to ensure that high-priority threads are executed promptly and effectively.

Current Problem

- FIFO Scheduling

- The original PintOS design employs a strict FIFO scheduling policy.

-

Threads are added to the

ready_listin the order of their arrival, completely disregarding their assigned priorities.Click to see the original code

void thread_unblock (struct thread *t) { enum intr_level old_level; ASSERT (is_thread (t)); old_level = intr_disable (); ASSERT (t->status == THREAD_BLOCKED); list_push_back (&ready_list, &t->elem); t->status = THREAD_READY; intr_set_level (old_level); }

- No Preemption

- A significant limitation of the current system is the absence of preemption.

- Even when a high-priority thread becomes ready (e.g., wakes up from a sleep or unblocks), it is not immediately granted CPU time if a lower-priority thread is currently executing.

- This leads to inefficient resource utilization and can cause critical delays for time-sensitive tasks.

Solution

- The proposed solution below addresses these issues:

- Creation/Unblocking:

- Priority Comparison: When a new thread is created or an existing thread is unblocked, its priority is compared with that of the currently running thread. If the new/unblocked thread possesses a higher priority,

thread_yield()is invoked to trigger a context switch. - Priority-Ordered Insertion: Threads are no longer simply pushed to the back of the

ready_list. Instead, they are inserted into theready_listin a sorted order based on their priority, ensuring that the highest-priority ready thread is always at the front.

- Priority Comparison: When a new thread is created or an existing thread is unblocked, its priority is compared with that of the currently running thread. If the new/unblocked thread possesses a higher priority,

- Level of Abstraction:

- Low-level of Abstraction: The

thread_unblock()function is low-level function. Hence it’s modified to solely handle the insertion of a thread into theready_listaccording to its priority. - Higher-level of Abstraction: The functions that have higher-level of abstraction, such as

thread_create()(when a new thread is created) orthread_wakeup()(when a sleeping thread becomes ready), are responsible for evaluating whether preemption is necessary and subsequently callingthread_yield()if a higher-priority thread has become ready.

- Low-level of Abstraction: The

- Timer Preemption:

- To enforce priority-based scheduling during timer interrupts,

intr_yield_on_return()is utilized instead of directly callingthread_yield(). This mechanism signals the interrupt handler to perform a context switch upon returning from the interrupt, allowing a higher-priority thread to be scheduled if available.

- To enforce priority-based scheduling during timer interrupts,

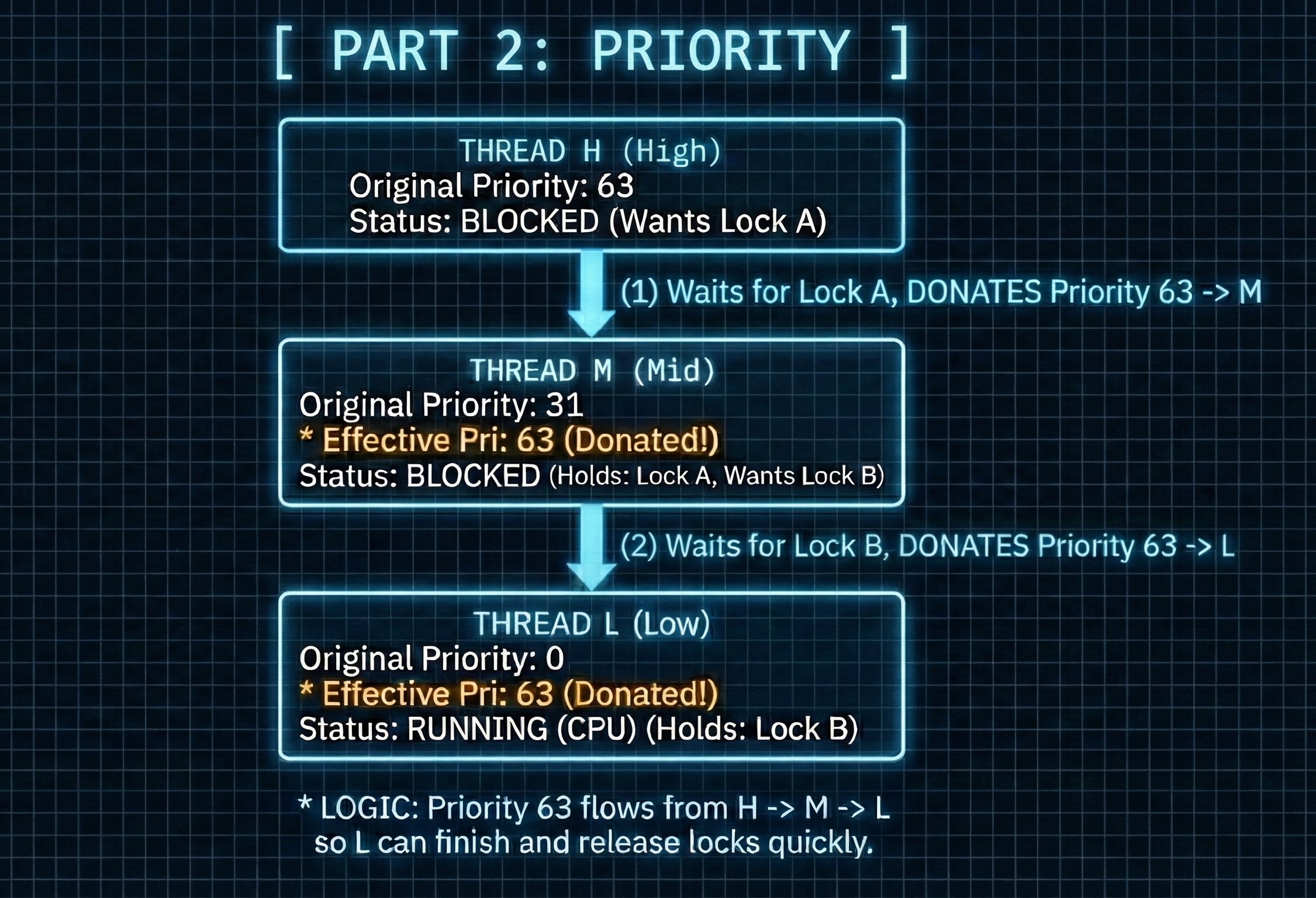

- Priority Donation:

- To mitigate the problem of priority inversion (where a high-priority thread gets blocked waiting for a resource held by a low-priority thread), additional fields (

donorsandlock_to_wait) are added to thestruct thread. This enables a mechanism where a low-priority thread temporarily inherits the priority of a high-priority thread that is blocked on a lock it holds.

- To mitigate the problem of priority inversion (where a high-priority thread gets blocked waiting for a resource held by a low-priority thread), additional fields (

- Creation/Unblocking:

Implementation Details

- The following components have been modified or introduced:

thread.hstruct thread- New members

lock_to_wait,original_priority,donors, anddonelemhave been added to facilitate priority donation and tracking.Click to see the refined code

struct thread { // other codes int64_t tick_wakeup; /* Tick till wake up */ struct lock *lock_to_wait; /* Address of the lock to wait */ int original_priority; /* Original Priority value */ struct list donors; /* Doners for priority inversion */ struct list_elem donelem; /* List element for donors list */ // other codes };

- New members

thread.cinit_thread()- The new fields

wait_to_lock,original_priority, anddonorsare initialized for each new thread.Click to see the refined code

static void init_thread (struct thread *t, const char *name, int priority) { enum intr_level old_level; ASSERT (t != NULL); ASSERT (PRI_MIN <= priority && priority <= PRI_MAX); ASSERT (name != NULL); memset (t, 0, sizeof *t); t->status = THREAD_BLOCKED; strlcpy (t->name, name, sizeof t->name); t->stack = (uint8_t *) t + PGSIZE; t->priority = priority; t->magic = THREAD_MAGIC; t->lock_to_wait = NULL; t->original_priority = priority; list_init(&t->donors); old_level = intr_disable (); list_push_back (&all_list, &t->allelem); intr_set_level (old_level); }

- The new fields

thread_sleep()- Threads are now inserted into the

sleep_listin an ordered fashion, prioritizing those with earlier wake-up times and higher priorities.Click to see the refined code

void thread_sleep(int64_t ticks_to_sleep) { struct thread *cur = thread_current(); ASSERT (!intr_context()); enum intr_level old_level = intr_disable(); int current_ticks = timer_ticks(); if (cur != idle_thread) { cur->tick_wakeup = current_ticks + ticks_to_sleep; struct list_elem *current_element = list_begin(&sleep_list); while (current_element != list_end(&sleep_list)) { struct thread *thread_current_element = list_entry(current_element, struct thread, elem); if (thread_current_element->priority < cur->priority || ((thread_current_element->priority == cur->priority) && (thread_current_element->tick_wakeup > cur->tick_wakeup))) break; current_element = list_next(current_element); } list_insert(current_element, &cur->elem); } thread_block(); intr_set_level (old_level); }

- Threads are now inserted into the

thread_wakeup()- This function now unblocks threads based on both their wake-up ticks and priority, and actively checks for preemption opportunities.

Click to see the refined code

void thread_wakeup(int64_t current_ticks) { enum intr_level old_level = intr_disable(); struct list_elem *current_element = list_begin(&sleep_list), *next_element = NULL; while (current_element != list_end(&sleep_list)) { struct thread *thread_to_check = list_entry(current_element, struct thread, elem); next_element = list_next(current_element); if (thread_to_check->tick_wakeup <= current_ticks) { list_remove(current_element); thread_unblock(thread_to_check); // put this thread to ready_list } current_element = next_element; } intr_set_level(old_level); }

- This function now unblocks threads based on both their wake-up ticks and priority, and actively checks for preemption opportunities.

thread_yield()- The logic for inserting the current thread back into the

ready_listhas been modified to ensure it is placed according to its priority, maintaining the sorted order of the list.Click to see the refined code

void thread_yield (void) { struct thread *cur = thread_current (); enum intr_level old_level; ASSERT (!intr_context ()); old_level = intr_disable (); if (cur != idle_thread) { bool is_inserted = false; for(struct list_elem *current_element = list_begin(&ready_list), *end_element = list_end(&ready_list); current_element != end_element; current_element = list_next(current_element)) { struct thread *thread_current_element = list_entry(current_element, struct thread, elem); if (thread_current_element->priority < cur->priority) { list_insert(current_element, &cur->elem); is_inserted = true; break; } } if(is_inserted == false) list_push_back(&ready_list, &cur->elem); } cur->status = THREAD_READY; schedule (); intr_set_level (old_level); }

- The logic for inserting the current thread back into the

thread_set_priority()- This function not only sets a thread’s priority but also incorporates logic for managing priority donation and triggering preemption if the new priority is higher than that of the currently running thread at the front of the

ready_list.Click to see the refined code

void thread_set_priority (int new_priority) { struct thread* current_thread = thread_current(); if(list_empty(¤t_thread->donors)) current_thread->priority = new_priority; else { if(current_thread->priority < new_priority) current_thread->priority = new_priority; } current_thread->original_priority = new_priority; if(list_empty(&ready_list) == false && current_thread->priority < list_entry(list_front(&ready_list), struct thread, elem)->priority) thread_yield(); }

- This function not only sets a thread’s priority but also incorporates logic for managing priority donation and triggering preemption if the new priority is higher than that of the currently running thread at the front of the

thread_unblock()- This function now inserts the unblocked thread into the

ready_listin the correct priority order, rather than simply appending it.Click to see the refined code

void thread_unblock (struct thread *t) { enum intr_level old_level; ASSERT (is_thread (t)); old_level = intr_disable (); ASSERT (t->status == THREAD_BLOCKED); bool is_inserted = false; for(struct list_elem *current_element = list_begin(&ready_list), *end_element = list_end(&ready_list); current_element != end_element; current_element = list_next(current_element)) { struct thread *thread_current_element = list_entry(current_element, struct thread, elem); if (thread_current_element->priority < t->priority) { list_insert(current_element, &t->elem); is_inserted = true; break; } } if(is_inserted == false) list_push_back(&ready_list, &t->elem); t->status = THREAD_READY; intr_set_level (old_level); }

- This function now inserts the unblocked thread into the

thread_create()- After a new thread is created and unblocked, this function now includes a check to determine if the newly created thread has a higher priority than the currently running thread.

- If so, it triggers

thread_yield()to allow immediate preemption.Click to see the refined code

tid_t thread_create (const char *name, int priority, thread_func *function, void *aux) { struct thread *t; struct kernel_thread_frame *kf; struct switch_entry_frame *ef; struct switch_threads_frame *sf; tid_t tid; ASSERT (function != NULL); /* Allocate thread. */ t = palloc_get_page (PAL_ZERO); if (t == NULL) return TID_ERROR; /* Initialize thread. */ init_thread (t, name, priority); tid = t->tid = allocate_tid (); /* Stack frame for kernel_thread(). */ kf = alloc_frame (t, sizeof *kf); kf->eip = NULL; kf->function = function; kf->aux = aux; /* Stack frame for switch_entry(). */ ef = alloc_frame (t, sizeof *ef); ef->eip = (void (*) (void)) kernel_thread; /* Stack frame for switch_threads(). */ sf = alloc_frame (t, sizeof *sf); sf->eip = switch_entry; sf->ebp = 0; /* Add to run queue. */ thread_unblock(t); // now preform preemption if needed if(t->priority > thread_current()->priority) thread_yield(); return tid; }

synch.csemaphore_elem- A

priorityfield has been added tostruct semaphore_elemto enable priority-based waiting on semaphores.Click to see the refined code

/* One semaphore in a list. */ struct semaphore_elem { struct list_elem elem; /* List element. */ struct semaphore semaphore; /* This semaphore. */ int priority /* Priority for scheduling*/ };

- A

sema_down()- It’s worth noting that

sema_down()is not modified- because priority matters when

sema_up()is called

- because priority matters when

- It’s worth noting that

sema_up()- This function now sorts the

waiterslist based onprioritybefore unblocking the highest-priority thread. - It also performs preemption if the unblocked thread has a higher priority than the currently running thread.

Click to see the refined code

void bool compare_priority_for_waiters(const struct list_elem *lhs, const struct list_elem *rhs, void *aux UNUSED) { struct thread *thread_left = list_entry(lhs, struct thread, elem), *thread_right = list_entry(rhs, struct thread, elem); return thread_left->priority > thread_right->priority; } void sema_up (struct semaphore *sema) { enum intr_level old_level; ASSERT (sema != NULL); old_level = intr_disable (); struct thread *thread_to_unblock = NULL; if (!list_empty (&sema->waiters)) { // sort list list_sort(&sema->waiters, compare_priority_for_waiters, NULL); thread_to_unblock = list_entry (list_pop_front (&sema->waiters), struct thread, elem); thread_unblock(thread_to_unblock); } sema->value++; if(!intr_context() && thread_to_unblock != NULL && thread_to_unblock->priority > thread_current()->priority) thread_yield(); intr_set_level (old_level); }

- This function now sorts the

lock_acquire()- This function now incorporates the priority donation mechanism.

- When a thread attempts to acquire a locked resource,

- if the holder’s priority is lower, the holder temporarily inherits the priority of the acquiring thread to prevent priority inversion.

- It also tracks the lock that the current thread is waiting for.

Click to see the refined code

void lock_acquire (struct lock *lock) { ASSERT (lock != NULL); ASSERT (!intr_context ()); ASSERT (!lock_held_by_current_thread (lock)); struct thread *current_thread = thread_current(); if(lock->holder != NULL) { for(struct lock *current_lock = lock; current_lock != NULL && current_lock->holder->priority < current_thread->priority; current_lock = current_lock->holder->lock_to_wait) current_lock->holder->priority = current_thread->priority; if(lock->holder->original_priority < current_thread->priority) list_push_back(&lock->holder->donors, ¤t_thread->donelem); } current_thread->lock_to_wait = lock; sema_down (&lock->semaphore); lock->holder = current_thread; current_thread->lock_to_wait = NULL; }

lock_release()- Upon releasing a lock, this function manages the removal of priority donors and restores the original priority of the lock holder if no higher-priority donations are active.

- It also correctly handles scenarios involving multiple priority donations.

Click to see the refined code

void lock_release (struct lock *lock) { ASSERT (lock != NULL); ASSERT (lock_held_by_current_thread (lock)); bool is_donor_gone = false; struct thread *thread_current_element = NULL; for(struct list_elem *current_element = list_begin(&lock->holder->donors), *end_element = list_end(&lock->holder->donors); current_element != end_element;) { thread_current_element = list_entry(current_element, struct thread, donelem); // handles multiple donation if (thread_current_element->lock_to_wait == lock) { current_element = list_remove(current_element); is_donor_gone = true; } else current_element = list_next(current_element); } struct thread *current_thread = thread_current(); if(is_donor_gone == true) current_thread->priority = current_thread->original_priority; for(struct list_elem *current_element = list_begin(&lock->holder->donors), *end_element = list_end(&lock->holder->donors); current_element != end_element; current_element = list_next(current_element)) { thread_current_element = list_entry(current_element, struct thread, donelem); if (thread_current_element->priority > current_thread->priority) current_thread->priority = thread_current_element->priority; } lock->holder = NULL; sema_up (&lock->semaphore); }

cond_wait()- The

waiterslist for conditions is now maintained in priority order, ensuring that the highest-priority waiting thread is signaled first.Click to see the refined code

void cond_wait (struct condition *cond, struct lock *lock) { struct semaphore_elem waiter; ASSERT (cond != NULL); ASSERT (lock != NULL); ASSERT (!intr_context ()); ASSERT (lock_held_by_current_thread (lock)); sema_init (&waiter.semaphore, 0); waiter.priority = lock->holder->priority; bool is_inserted = false; for(struct list_elem *current_element = list_begin(&cond->waiters), *end_element = list_end(&cond->waiters); current_element != end_element; current_element = list_next(current_element)) { struct semaphore_elem *semaphore_current_element = list_entry(current_element, struct semaphore_elem, elem); if (semaphore_current_element->priority < waiter.priority) { list_insert(current_element, &waiter.elem); is_inserted = true; break; } } if(is_inserted == false) list_push_back(&cond->waiters, &waiter.elem); lock_release (lock); sema_down (&waiter.semaphore); lock_acquire (lock); }

- The

Conclusion

- This comprehensive enhancement successfully introduces priority scheduling, preemption, and a robust priority donation mechanism into PintOS.

- These changes significantly improve the operating system’s responsiveness and efficiency by ensuring that high-priority tasks are executed in a timely manner and by effectively addressing the challenges posed by priority inversion.

Improved Grade

Final Thoughts

- Together, these improvements fundamentally transform PintOS into a more robust and efficient operating system.

- By optimizing resource utilization through intelligent thread management and prioritizing critical tasks, PintOS now offers enhanced responsiveness and overall performance.

Leave a comment